Reinforcement Learning for Privacy: Training Local Models on the Anonymization Frontier

Today’s most capable LLMs offer powerful reasoning abilities, but using them raises serious privacy concerns. Queries often include sensitive information about work, health, finances, or personal life. Even when encrypted in transit, data sent to model providers can be logged, analyzed, or exposed in a breach.

A deeper issue is concentration of control. If personal data is consistently shared with LLM providers, it feeds a centralized knowledge base controlled by a few organizations. Enabling selective data sharing is therefore important for both personal privacy and broader economic sovereignty.

What about TEEs?

You might ask: why not just run models in Trusted Execution Environments (TEEs) with hardware-level privacy guarantees? We do serve open-source models in GPU-enabled TEEs, but this approach has fundamental limits:

- The best models are closed-source: Claude Opus/Sonnet 4, the GPT series, and others cannot be self-hosted.

- Hardware constraints: Leading open-source models (Qwen3-Coder, Kimi-K2) exceed 100B parameters. A single H100 cannot host them, and PCIe bus encryption in confidential computing only works within one GPU, so these models cannot simply be split across several GPUs inside a TEE.

Today, privacy means choosing between capability and confidentiality: smaller models in TEEs for privacy, or powerful closed models at the cost of privacy.

The ideal personal AI combines a local reasoning coordinator with a network of models running locally, in TEEs, and as closed services, each playing its part in giving users maximum capability without compromising privacy.

Attempts so far

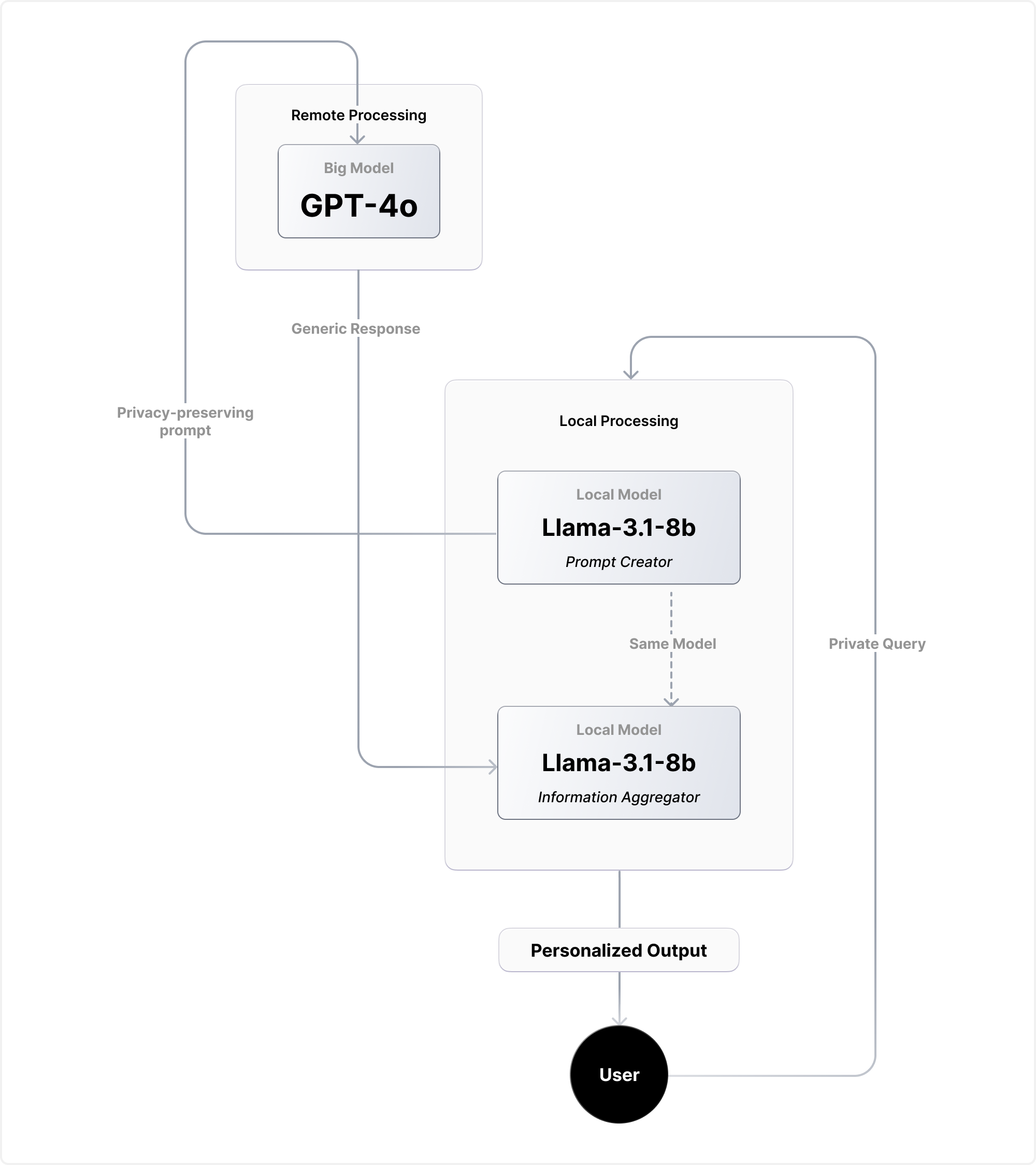

The PAPILLON system is the closest attempt at privacy-conscious delegation to date. It chains local and cloud-based models together.

PAPILLON uses a local model to rewrite user queries into privacy-preserving prompts before sending them to powerful API-based models. In practice, it offered only partial protection: response quality dropped to 85% of what the API model alone achieves, and private information still leaked in 7.5% of queries with Llama-3.1-8B as the local model.

The problem lies in its free-form prompt generation. The local model frequently injects irrelevant details, misinterprets requests, or over-generalizes, which hurts both utility and privacy. On top of this architectural flaw, running an 8B-parameter model locally is impractical for most users today.

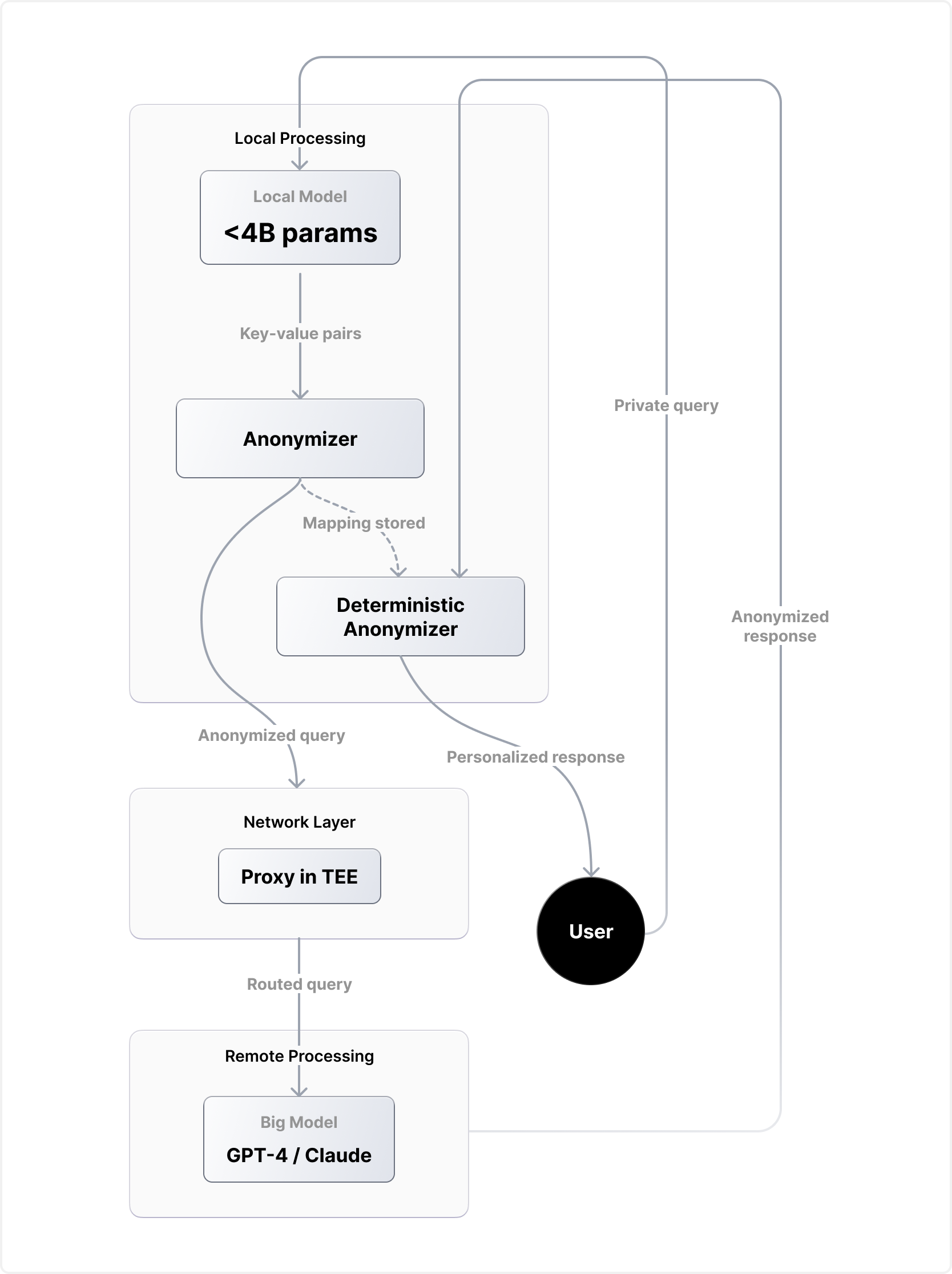

Our approach: anonymization with semantic preservation

Instead of handing model providers your raw data, our system automatically detects and replaces private information before your query ever leaves your device. Unlike approaches that rewrite entire prompts (often losing context), we take a surgical approach: replace only what’s necessary—consistently and accurately.

How it works

- Local detection: A lightweight on-device model identifies sensitive information in your query.

- Smart replacement: Each item is swapped with a semantically equivalent placeholder that preserves context.

- Secure routing: The anonymized query is sent through a privacy-preserving proxy to the external model.

- Automatic restoration: The response is automatically re-personalized with your original information.
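The four steps above can be sketched end to end in Python. This is a minimal illustration, not the product's implementation: `KNOWN_PII`, `detect_pii`, `substitute`, `anonymized_call`, and `fake_provider` are hypothetical names, a fixed lookup table stands in for the on-device model, and the provider call is a stub rather than the privacy-preserving proxy.

```python
import re
from typing import Callable, Dict

# Hypothetical stand-in for the on-device detection model. A real system
# would run a small fine-tuned LLM here; a fixed lookup table is used only
# to show the data flow.
KNOWN_PII = {"Google": "TechCorp", "Jennifer": "Michelle", "Marc": "Robert"}


def detect_pii(query: str) -> Dict[str, str]:
    """Step 1: return {original: placeholder} for entities found in the query."""
    return {orig: repl for orig, repl in KNOWN_PII.items()
            if re.search(rf"\b{re.escape(orig)}\b", query)}


def substitute(text: str, mapping: Dict[str, str]) -> str:
    """Replace whole-word matches in one pass so substitutions never chain."""
    if not mapping:
        return text
    keys = sorted(mapping, key=len, reverse=True)  # longest match wins
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keys)) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(0)], text)


def anonymized_call(query: str, send: Callable[[str], str]) -> str:
    mapping = detect_pii(query)              # 1. local detection
    safe_query = substitute(query, mapping)  # 2. smart replacement
    response = send(safe_query)              # 3. routing (stubbed by `send`)
    reverse = {v: k for k, v in mapping.items()}
    return substitute(response, reverse)     # 4. automatic restoration


def fake_provider(prompt: str) -> str:
    # Stub for the external LLM; it only ever sees the anonymized prompt.
    assert "Jennifer" not in prompt and "Marc" not in prompt
    return "Consider raising it with Michelle before going to the authorities."


print(anonymized_call("My skip-level is Jennifer who reports directly to Marc.", fake_provider))
# → Consider raising it with Jennifer before going to the authorities.
```

Restoration here simply inverts the mapping, which assumes every placeholder is unique and does not otherwise appear in the provider's answer; a production system would need a collision check.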

Example in action

A user sends three connected queries:

- "I discovered my manager at Google is systematically inflating sales numbers for the cloud infrastructure division"

- "I'm considering becoming a whistleblower to the SEC about financial fraud at my tech company - could this affect my H1-B visa status?"

- "My skip-level is Jennifer who reports directly to Marc - should I talk to her first or go straight to the authorities?"

What the model provider sees (three separate, unconnected queries):

- "I discovered my manager at TechCorp is systematically inflating sales numbers for the enterprise software division"

- "I'm considering becoming a whistleblower to the SEC about financial fraud at my tech company - could this affect my H1-B visa status?"

- "My skip-level is Michelle who reports directly to Robert - should I talk to her first or go straight to the authorities?"

Connected together, these queries would let Google identify the whistleblower almost instantly: there is likely only one H1-B employee in cloud infrastructure who could have sent them. Sent as three anonymous queries from different "people", they still earn solid legal advice while the user stays protected: the model provider doesn't know the queries are related, yet still helps with each one.

The privacy guarantees

Content-level protection

Anonymization follows these principles:

- Personal names → replaced with culturally and contextually similar alternatives

- Company names → fictional entities of the same industry and size

- Locations (<100k population) → mapped to synthetic equivalents

- Dates and times → shifted consistently to preserve relative timing

- Financial amounts → adjusted slightly to maintain context

- Identifiers (emails, phone numbers, URLs) → randomized but format-valid
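The mechanical rules in this list (consistent date shifts, slight amount adjustments, format-valid identifiers) can be sketched as below. The class name, the ±30-day shift window, and drawing the amount factor from the 0.8 to 1.25x range given in the judge rules are assumptions for illustration only; names, companies, and locations need the model's contextual judgment and are not covered here.

```python
import random
import string
from datetime import date, timedelta
from typing import Dict, Optional


class SessionAnonymizer:
    """Illustrative helpers for the mechanical replacement rules.

    Assumptions (not from the post): dates move by one random non-zero
    offset of up to 30 days per session, and amounts share one scale factor
    drawn from 0.8-1.25x.
    """

    def __init__(self, seed: Optional[int] = None):
        self.rng = random.Random(seed)
        self.day_shift = timedelta(days=self.rng.choice([d for d in range(-30, 31) if d != 0]))
        self.amount_factor = self.rng.uniform(0.8, 1.25)
        self._ids: Dict[str, str] = {}  # same identifier -> same replacement

    def shift_date(self, d: date) -> date:
        # One shared offset preserves the gaps between dates.
        return d + self.day_shift

    def scale_amount(self, amount: float) -> float:
        # Same order of magnitude, different exact figure.
        return round(amount * self.amount_factor, 2)

    def randomize_identifier(self, ident: str) -> str:
        # Keep the format: digits stay digits, letters stay letters of the
        # same case, and separators such as '@', '.', '-' are untouched.
        if ident not in self._ids:
            chars = []
            for ch in ident:
                if ch.isdigit():
                    chars.append(self.rng.choice(string.digits))
                elif ch.isalpha():
                    pool = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
                    chars.append(self.rng.choice(pool))
                else:
                    chars.append(ch)
            self._ids[ident] = "".join(chars)
        return self._ids[ident]


anon = SessionAnonymizer(seed=42)
print(anon.shift_date(date(2024, 3, 15)) - anon.shift_date(date(2024, 3, 1)))  # → 14 days, 0:00:00
print(anon.scale_amount(12000.0))
print(anon.randomize_identifier("jane.doe42@example.com"))
```

Because one offset and one factor are drawn per session, repeated mentions of the same date, amount, or identifier stay consistent within a conversation, while a new session produces different values.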

Network-level protection

Even perfect anonymization can leak through usage patterns, so we add network safeguards:

- TEE proxy: Queries are encrypted and routed through intermediate nodes hosted in Trusted Execution Environments, whose remote attestation lets clients cryptographically verify that the proxy does not log or store queries.

- Traffic obfuscation: Queries blend with thousands of others, making individual tracking statistically infeasible at scale.

Training small models for surgical PII replacement

We train lightweight, on-device models to identify and replace private information with high precision. Unlike full-prompt rewriting or blunt redaction, our models make targeted, surgical replacements of specific PII while leaving the rest intact. By ensuring replacements are semantically similar, we preserve query intent while preventing PII exposure to the model provider.

The anonymization task

We train models for a tool-calling task: given an input query, the model must identify PII requiring replacement and produce a structured tool call with appropriate substitutions. Here are two examples showing expected behavior:

Example with PII replacement:

Original query: "Afternoon Mariana, found excellent resources on elderly mental health. Set up an account with username nqampqqsetruqdf5671 to access it."

Model response: <tool_call>

{"name": "replace_entities", "arguments": {"replacements": [

{"original": "Mariana", "replacement": "Elara"},

{"original": "nqampqqsetruqdf5671", "replacement": "xplorvzjyfzrtg9824"}

]}}

</tool_call>

Example with no PII replacement needed:

Original query: "Few inventions have had as much impact as Thomas Edison's early work in electricity."

Model response: <tool_call>

{"name": "replace_entities", "arguments": {"replacements": []}}

</tool_call>

Notice how the model correctly leaves Thomas Edison untouched as a public historical figure. This distinction between private and public information is essential for protecting privacy while preserving utility.
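On the client side, the tool call has to be parsed and applied to the query. A minimal sketch, assuming the model emits exactly one `<tool_call>` block in the format of the examples above; `parse_replacements` and `apply_replacements` are hypothetical helpers, not part of a published API.

```python
import json
import re
from typing import List, Tuple

# Matches the JSON object between <tool_call> tags; DOTALL lets it span lines.
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)


def parse_replacements(model_output: str) -> List[Tuple[str, str]]:
    match = TOOL_CALL_RE.search(model_output)
    if match is None:
        raise ValueError("no <tool_call> block found")
    call = json.loads(match.group(1))
    if call.get("name") != "replace_entities":
        raise ValueError(f"unexpected tool name: {call.get('name')!r}")
    return [(r["original"], r["replacement"]) for r in call["arguments"]["replacements"]]


def apply_replacements(query: str, pairs: List[Tuple[str, str]]) -> str:
    if not pairs:
        return query  # e.g. the Thomas Edison case: nothing to replace
    mapping = dict(pairs)
    # Single pass, longest originals first, so one replacement can never be
    # rewritten again by a later pair.
    pattern = re.compile("|".join(re.escape(o) for o in sorted(mapping, key=len, reverse=True)))
    return pattern.sub(lambda m: mapping[m.group(0)], query)


QUERY = ("Afternoon Mariana, found excellent resources on elderly mental health. "
         "Set up an account with username nqampqqsetruqdf5671 to access it.")
OUTPUT = """<tool_call>
{"name": "replace_entities", "arguments": {"replacements": [
{"original": "Mariana", "replacement": "Elara"},
{"original": "nqampqqsetruqdf5671", "replacement": "xplorvzjyfzrtg9824"}
]}}
</tool_call>"""

print(apply_replacements(QUERY, parse_replacements(OUTPUT)))
# → Afternoon Elara, found excellent resources on elderly mental health. Set up an account with username xplorvzjyfzrtg9824 to access it.
```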

Evaluation criteria: the LLM judge

To train and evaluate our models, we built a comprehensive LLM judge that scores anonymization quality on a 0-10 scale. This is possible because state-of-the-art LLMs already perform well on anonymization tasks. The judge evaluates five dimensions:

- JSON format: Proper tool call formatting

- PII identification: Correct detection of private information requiring replacement

- Replacement granularity: Only specific PII entities (names, numbers, addresses) replaced, not full sentences

- Replacement quality: Substitutions that preserve context and meaning

- Rule compliance: Adherence to explicit replacement guidelines

Nuanced rules by category:

- Names & organizations: Replace private individuals with culturally/contextually appropriate alternatives; leave public figures untouched.

- Monetary values: Scale amounts by 0.8–1.25× to keep magnitude; leave public prices unchanged.

- Dates & times: Shift private dates consistently; keep historical dates and holidays intact.

Crucially, the judge heavily penalizes models that replace entire sentences or phrases instead of specific PII entities. Good anonymization is surgical and targeted.
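The judge itself is an LLM, but some of its rules are mechanical enough to pre-check cheaply. The sketch below is a hypothetical deterministic filter, not the authors' judge: it flags replacements longer than an assumed four-word limit, no-op replacements, and dollar amounts scaled outside the 0.8 to 1.25x range.

```python
import re
from typing import List, Optional, Tuple

# Assumed threshold: an "entity" longer than this many words is treated as
# a phrase- or sentence-level rewrite rather than a surgical replacement.
MAX_ENTITY_WORDS = 4

AMOUNT_RE = re.compile(r"^\$(\d[\d,]*(?:\.\d+)?)$")


def parse_amount(text: str) -> Optional[float]:
    """Return the value of a dollar amount like '$1,200.50', else None."""
    match = AMOUNT_RE.match(text.strip())
    return float(match.group(1).replace(",", "")) if match else None


def precheck(pairs: List[Tuple[str, str]]) -> List[str]:
    issues = []
    for original, replacement in pairs:
        if len(original.split()) > MAX_ENTITY_WORDS:
            issues.append(f"not surgical: {original!r}")
        if original == replacement:
            issues.append(f"no-op replacement: {original!r}")
        old, new = parse_amount(original), parse_amount(replacement)
        # Skip zero or non-monetary values; otherwise enforce the scale rule.
        if old and new is not None and not 0.8 <= new / old <= 1.25:
            issues.append(f"amount out of 0.8-1.25x range: {original} -> {replacement}")
    return issues


print(precheck([("Mariana", "Elara"), ("$1,000", "$1,500")]))
# → ['amount out of 0.8-1.25x range: $1,000 -> $1,500']
```

A filter like this could only complement the judge: it cannot tell a public figure from a private individual, which is exactly where the LLM judge is needed.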

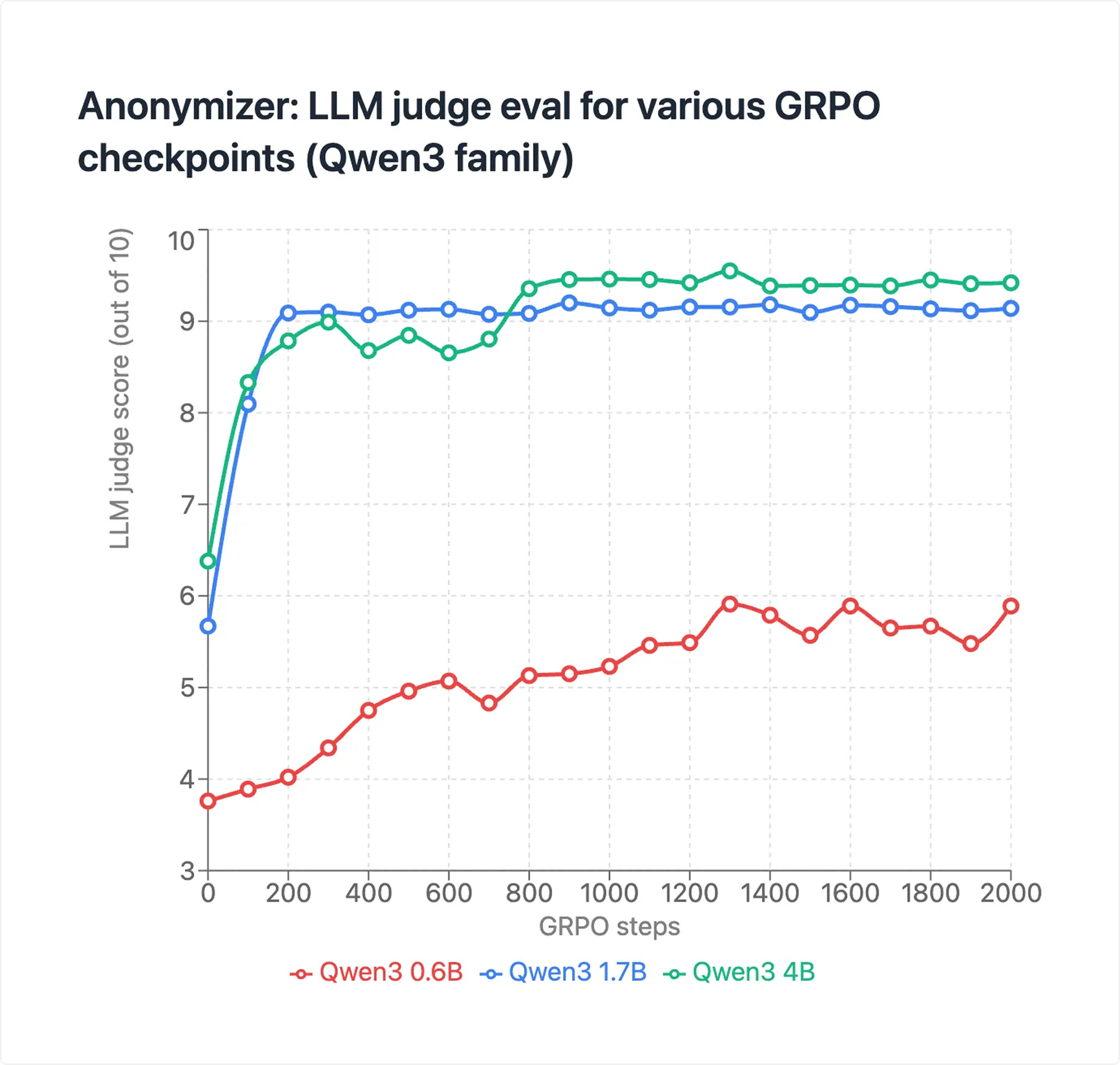

Dataset creation and initial training

We built a dataset of ~30,000 samples covering both PII replacement and non-replacement across all categories, split into 70% training, 20% validation, and 10% test.
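One way to produce a 70/20/10 split that keeps both kinds of samples in every split is to stratify on whether a sample needs replacement. The post does not say whether its split was stratified; the `has_pii` field and the `stratified_split` function are assumptions. Integer arithmetic keeps the split sizes free of floating-point rounding.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Sequence, Tuple


def stratified_split(samples: Sequence[dict], key: Callable[[dict], Hashable],
                     percents: Tuple[int, int, int] = (70, 20, 10),
                     seed: int = 0) -> Tuple[List[dict], List[dict], List[dict]]:
    rng = random.Random(seed)
    groups: Dict[Hashable, List[dict]] = defaultdict(list)
    for sample in samples:
        groups[key(sample)].append(sample)
    train, val, test = [], [], []
    for label in sorted(groups, key=repr):  # deterministic group order
        items = groups[label]
        rng.shuffle(items)
        n_train = len(items) * percents[0] // 100
        n_val = len(items) * percents[1] // 100
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to test
    return train, val, test


# Hypothetical dataset: 30,000 samples, two thirds of which need a replacement.
data = [{"id": i, "has_pii": i % 3 != 0} for i in range(30_000)]
train, val, test = stratified_split(data, key=lambda s: s["has_pii"])
print(len(train), len(val), len(test))  # → 21000 6000 3000
```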

Supervised fine-tuning (SFT) on the Qwen3 family showed modest gains:

- 0.6B model: <1/10 → 3.76/10

- 1.7B model: ~2/10 → 5.67/10

- 4B model: ~4/10 → 6.38/10

This is encouraging but far from the target. For reference, GPT-4.1 scores 9.77/10 under the same judge, which is itself GPT-4.1, so this score is a self-evaluation.

Other models and RL techniques

We also tested Hugging Face's SmolLM, Liquid AI's LFM models, and Llama 3.2 1B, but all underperformed Qwen3 after SFT.

We then applied Direct Preference Optimization (DPO) on 10k new samples, gaining a further 1.5–2 points over post-SFT Qwen3, but still falling short of the target.
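For readers unfamiliar with DPO, the per-pair loss is shown below. The post does not describe how its preference pairs were built or which β it used; the sketch assumes each pair comes with summed log-probabilities under the policy and a frozen reference model (for example the post-SFT checkpoint), and uses β = 0.1 as an illustrative default.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given summed token log-probs."""
    # How much more the policy favors the chosen answer than the reference
    # does, minus the same quantity for the rejected answer.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)), computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical log-probs: the policy has moved toward the chosen anonymization.
print(round(dpo_loss(-12.0, -15.0, -13.0, -14.0), 4))  # → 0.5981
```

The loss equals log 2 when the policy matches the reference and falls as the policy shifts probability toward the preferred response.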

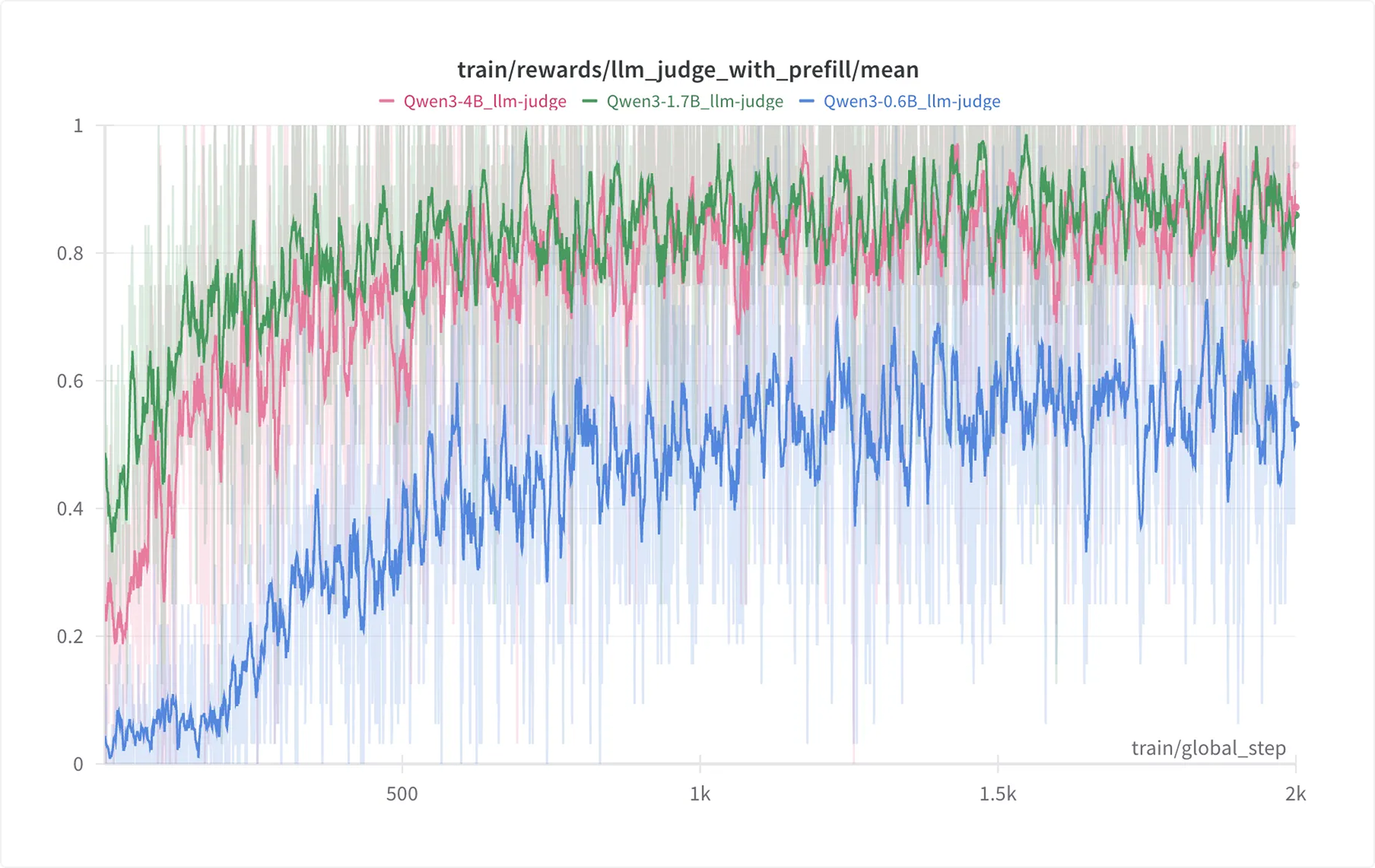

Breakthrough: GRPO with an LLM judge

The breakthrough came with Group Relative Policy Optimization (GRPO), using half of the SFT test set. Because PII replacement often has multiple valid answers, pairing GRPO with an LLM judge (GPT-4.1) was a natural fit: instead of matching a single reference answer, GRPO samples several responses per query, scores each one with the judge in real time, and reinforces the responses that score above their group's average. This drove significant performance gains.
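The group-relative part of GRPO is small enough to show directly. This sketch computes only the advantages, normalizing each judge score against the mean and standard deviation of its own group of samples; the clipped policy-ratio objective and KL penalty of full GRPO are omitted, and the scores are made up for illustration.

```python
import statistics
from typing import List


def group_relative_advantages(scores: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: each sample's reward relative to its own group."""
    mean = statistics.fmean(scores)
    std = statistics.pstdev(scores)
    return [(s - mean) / (std + eps) for s in scores]


# Hypothetical judge scores (0-10) for four completions sampled for one query.
judge_scores = [9.5, 6.0, 9.5, 3.0]
advantages = group_relative_advantages(judge_scores)
print([round(a, 2) for a in advantages])  # → [0.92, -0.37, 0.92, -1.47]
```

Because each completion is compared only with its siblings for the same query, the judge never needs a single reference answer, which is why this setup tolerates multiple valid replacements.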

The training process revealed key challenges:

- The judge initially mishandled cases where public figures' names should remain unchanged

- Date replacements occasionally shifted dates by centuries

- Models learned reward hacks, e.g. outputting toy examples instead of anonymizing the query

After iterative improvements to judge prompts and training, we reached the following results:

- Qwen3 4B: 9.55/10

- Qwen3 1.7B: 9.20/10

- Qwen3 0.6B: 5.96/10

Our 1.7B and 4B models achieve anonymization performance comparable to GPT-4.1 (9.77/10), even though GPT-4.1 is reportedly about 1000x larger.

Optimization for local deployment

We're now optimizing these models for practical deployment on consumer hardware. Current performance metrics include:

- Time to first token (TTFT): <250ms for both 1.7B and 4B models

- Total completion time: <1s for 1.7B, <2s for 4B

These optimizations use quantization, KV caching, and speculative decoding with the 0.6B model as the draft model. Our goal is deployment first on MacBooks, then eventually on mobile devices.
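Speculative decoding can be illustrated with its greedy variant. This sketch assumes the 0.6B model acts as the draft and the 1.7B or 4B model as the target; the toy `target_model` and `draft_model` functions stand in for real networks, and the probabilistic acceptance rule needed for sampled (non-greedy) decoding is not shown.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target model verifies the proposal. Real engines score all
        #    k positions in one batched forward pass; a loop keeps this readable.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if expected != tok:
                break  # first mismatch: discard the rest of the proposal
        else:
            # Every draft token matched, so the target yields one extra token.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    return out[: len(prompt) + max_new]


# Toy models over a 50-token vocabulary; the draft disagrees with the
# target whenever the prefix length is a multiple of 5.
def target_model(prefix: List[int]) -> int:
    return (sum(prefix) * 31 + 7) % 50


def draft_model(prefix: List[int]) -> int:
    guess = target_model(prefix)
    return guess if len(prefix) % 5 else (guess + 1) % 50


prompt = [1, 2, 3]
print(speculative_decode(target_model, draft_model, prompt, 20) == greedy_decode(target_model, prompt, 20))  # → True
```

Every kept token is the target's own greedy choice, so the output is identical to decoding with the target alone; the speed-up comes from the target checking several draft tokens per forward pass instead of generating one token at a time.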

Full technical details and benchmarks will be available on arXiv in the coming weeks.

What this means for your privacy

To summarize, our system provides the following guarantees:

What we protect

- Identity: Model providers cannot link queries to you

- Relationships: Names, companies, and associations remain private

- Location: Your specific geographic information stays confidential

- Timing: Exact dates and schedules are obscured

- Financial data: Amounts and transactions are protected

- Behavioral patterns: Network routing prevents linking your queries through behavioral fingerprinting

What remains visible

- General topics: A closed model provider still sees what each query is about, e.g. coding help or meeting prep

- Language and structure: Grammar and phrasing remain unchanged

- Public information: Facts and common knowledge are left untouched

Performance and user experience

We believe a personal AI should come with no privacy compromises. Our goal is to deliver the best possible product experience: you shouldn’t have to worry about what you say, which model to use, or whether you’ll be judged or censored.

- Latency: <500ms overhead (targeting <100ms in future versions)

- Quality: >99% response parity with direct API usage

- Reliability: Consistent, accurate replacements

- Compatibility: Works with any text-based LLM API

Understanding the limitations

Our approach greatly improves privacy, but some limits remain:

- Network strength: Privacy routing depends on either a relay network (like Tor) or TEEs (with a hardware trust assumption). For queries hitting closed-source models, anonymity also scales with overall request volume.

- Trust boundaries: Local anonymization and routing still require trust. While anyone can build from source and verify execution, users are ultimately trusting our infrastructure to behave as reported.

- Inference limits: Extremely unique queries may remain theoretically traceable unless processed by TEE-only models. Over time, this will improve as increasingly powerful models become deployable inside TEEs.

We are developing a local router model that automates these routing decisions, so users never need to think about them. In the coming years, we envision a future with millions of models exposed as tools, coordinated by a small local reasoning system that routes, splits, and solves requests without ever compromising privacy.

Making an informed decision

Enchanted, our public-facing app built on this anonymization work, is designed for users who:

- Rely on powerful LLMs for sensitive tasks

- Want strong, practical privacy protections

- Accept the small trade-offs between utility and anonymization (we’re close to eliminating them)

By combining local anonymization with network-level privacy, Enchanted provides multiple, independent layers of protection against privacy attacks.

Use Enchanted today and reclaim your intelligence: Enchanted on the App Store