Silo upgrades: new web app, encrypted sync, deep research

Silo is no longer iOS-only. A new web app gives access to the same chats and models from any modern browser: https://silo.freysa.ai/.

The key constraint from day one has not changed: server infrastructure should never see plaintext conversations. The web app follows the same pattern as the iOS client. It talks only to encrypted storage and to a TEE-protected proxy that routes to the actual models. There is no logging layer watching prompts.

For users who already rely on Silo on iOS, the web app turns that same private assistant into something that can sit next to a laptop IDE, a Notion tab, or a dozen research PDFs.

End-to-end encryption and multi-device sync

The biggest architectural change in this release is encrypted sync. The goal was simple: enable history across devices without introducing any new party that can see plaintext.

High-level design

- The first iOS device generates an Application Keypair (AK).

  - AK.private never leaves the device keychain, which is protected by Face ID, Touch ID, or a passcode.

  - AK.public is uploaded so that the server can encrypt to it but cannot decrypt.

- When the TEE-protected proxy finishes a response, it:

  - Generates a fresh data encryption key (DEK).

  - Encrypts the response with an AEAD scheme under that DEK.

  - Wraps the DEK to AK.public.

  - Stores only the ciphertext and the wrapped DEK in Firebase.

- The client device fetches the ciphertext, uses AK.private to unwrap the DEK, and decrypts locally.

The TEE sees plaintext inside the enclave during generation, then immediately encrypts it. Firebase only sees ciphertext and wrapped keys. The application backend never receives AK.private.
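The envelope scheme above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not Silo's code. It assumes X25519 for AK and an ECIES-style wrap for the DEK (an ephemeral key agreement with AK.public, followed by a KDF). A pure-stdlib encrypt-then-MAC construction stands in for a real AEAD such as AES-GCM. All names, including `proxy_finish_response`, `client_read`, `wrap_dek`, and `StoredRecord`, are hypothetical. The hand-rolled X25519 is not constant-time and exists only to keep the example dependency-free.

```python
# Minimal sketch: hypothetical names, illustrative stand-in primitives (not production crypto).
import hashlib
import hmac
import os
from dataclasses import dataclass

P = 2**255 - 19                      # Curve25519 field prime
BASE_U = (9).to_bytes(32, "little")  # Curve25519 base point (u = 9)


def x25519(k: bytes, u: bytes) -> bytes:
    """X25519 per RFC 7748 (Montgomery ladder). Illustrative, NOT constant-time."""
    n = bytearray(k)
    n[0] &= 248
    n[31] = (n[31] & 127) | 64
    scalar = int.from_bytes(n, "little")
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        if swap ^ bit:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        da, cb = (x3 - z3) * a % P, (x3 + z3) * b % P
        x3, z3 = (da + cb) ** 2 % P, x1 * (da - cb) ** 2 % P
        x2, z2 = aa * bb % P, e * (aa + 121665 * e) % P
    if swap:
        x2, z2 = x3, z3
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def keypair() -> tuple[bytes, bytes]:
    private = os.urandom(32)
    return private, x25519(private, BASE_U)


def kdf(key: bytes, label: bytes) -> bytes:
    # HMAC-SHA256 used as a one-block KDF; a real design would use HKDF.
    return hmac.new(key, label, hashlib.sha256).digest()


def _xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    enc_key = kdf(key, b"enc")
    stream = b"".join(kdf(enc_key, nonce + i.to_bytes(8, "big"))
                      for i in range(-(-len(data) // 32)))
    return bytes(d ^ s for d, s in zip(data, stream))


def _tag(key: bytes, aad: bytes, body: bytes) -> bytes:
    return kdf(kdf(key, b"mac"), len(aad).to_bytes(8, "big") + aad + body)


def aead_seal(key: bytes, plaintext: bytes, aad: bytes = b"") -> bytes:
    # Toy encrypt-then-MAC AEAD standing in for AES-GCM: nonce || ciphertext || tag.
    nonce = os.urandom(16)
    ct = _xor_stream(key, nonce, plaintext)
    return nonce + ct + _tag(key, aad, nonce + ct)


def aead_open(key: bytes, blob: bytes, aad: bytes = b"") -> bytes:
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, _tag(key, aad, nonce + ct)):
        raise ValueError("authentication failed")
    return _xor_stream(key, nonce, ct)


def wrap_dek(dek: bytes, ak_public: bytes) -> bytes:
    # ECIES-style: ephemeral X25519 with AK.public, derive a wrap key, seal the DEK.
    eph_private, eph_public = keypair()
    wrap_key = kdf(x25519(eph_private, ak_public), b"dek-wrap" + eph_public + ak_public)
    return eph_public + aead_seal(wrap_key, dek)


def unwrap_dek(wrapped: bytes, ak_private: bytes) -> bytes:
    eph_public, sealed = wrapped[:32], wrapped[32:]
    ak_public = x25519(ak_private, BASE_U)
    wrap_key = kdf(x25519(ak_private, eph_public), b"dek-wrap" + eph_public + ak_public)
    return aead_open(wrap_key, sealed)


@dataclass
class StoredRecord:  # everything the storage layer (Firebase) receives
    ciphertext: bytes
    wrapped_dek: bytes


def proxy_finish_response(text: str, ak_public: bytes, record_id: bytes) -> StoredRecord:
    dek = os.urandom(32)  # fresh DEK for every response
    ciphertext = aead_seal(dek, text.encode(), aad=record_id)
    return StoredRecord(ciphertext, wrap_dek(dek, ak_public))  # only ciphertext leaves


def client_read(record: StoredRecord, ak_private: bytes, record_id: bytes) -> str:
    dek = unwrap_dek(record.wrapped_dek, ak_private)
    return aead_open(dek, record.ciphertext, aad=record_id).decode()
```

Passing the record ID as associated data binds each ciphertext to its slot. A ciphertext moved into a different record then fails authentication, even under the right key.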

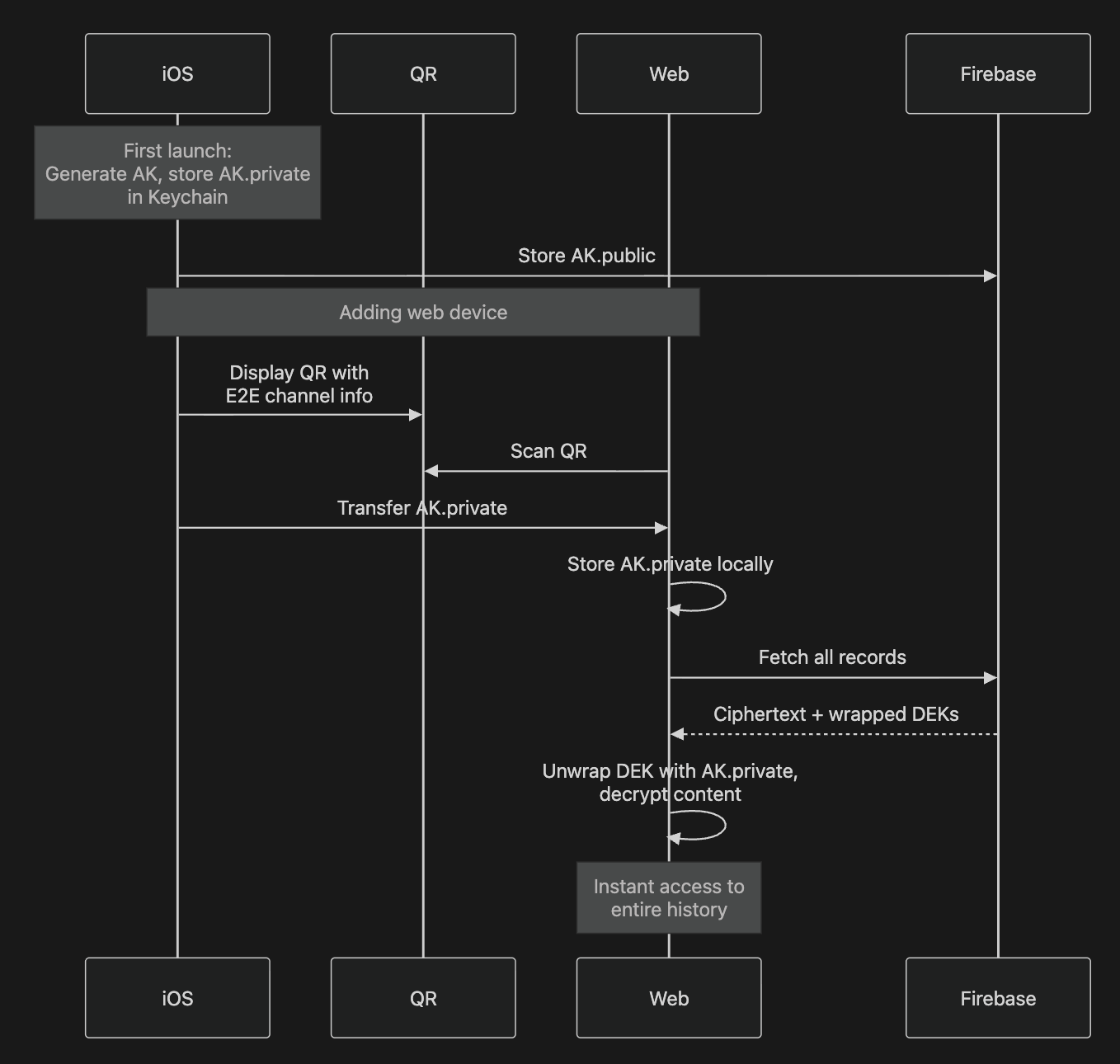

Multi-device via QR pairing

Multi-device access uses a simple QR link between devices rather than passkeys:

- The first device holds AK.private in its secure keychain.

- When a browser or a second device needs to join, Silo opens an end-to-end channel between the two and encodes AK.private into a one-time QR-based transfer.

- The new device scans the QR code and imports AK.private into its own secure storage.

- From that point on, it can fetch any encrypted record, unwrap the DEKs, and read history.

The server never has access to AK.private and cannot add new devices on its own. If all devices are lost before linking another one, history is effectively unrecoverable, which is the expected tradeoff for real end-to-end encryption.
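The one-time QR transfer could be built in several ways. The sketch below shows one possibility, under assumptions that go beyond the description above. The first device seals AK.private under a random one-time pairing key and posts the sealed blob to a relay that releases it exactly once. It then shows the pairing key and session ID in the QR code. Because the key travels only optically, the relay sees nothing but ciphertext. `OneTimeRelay`, `start_pairing`, and `complete_pairing` are hypothetical names. The HMAC-based encrypt-then-MAC stands in for a production AEAD.

```python
# Minimal sketch of a one-time QR key transfer; all names and the design are hypothetical.
import base64
import hashlib
import hmac
import json
import os


def _prf(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def _xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    enc_key = _prf(key, b"enc")
    stream = b"".join(_prf(enc_key, nonce + i.to_bytes(8, "big"))
                      for i in range(-(-len(data) // 32)))
    return bytes(d ^ s for d, s in zip(data, stream))


def _tag(key: bytes, aad: bytes, body: bytes) -> bytes:
    return _prf(_prf(key, b"mac"), len(aad).to_bytes(8, "big") + aad + body)


def seal(key: bytes, plaintext: bytes, aad: bytes) -> bytes:
    # Toy encrypt-then-MAC AEAD (stand-in for AES-GCM): nonce || ciphertext || tag.
    nonce = os.urandom(16)
    ct = _xor_stream(key, nonce, plaintext)
    return nonce + ct + _tag(key, aad, nonce + ct)


def open_sealed(key: bytes, blob: bytes, aad: bytes) -> bytes:
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, _tag(key, aad, nonce + ct)):
        raise ValueError("authentication failed")
    return _xor_stream(key, nonce, ct)


class OneTimeRelay:
    """Hypothetical relay: holds one sealed blob per session and hands it out once."""

    def __init__(self) -> None:
        self._mailboxes: dict[str, bytes] = {}

    def put(self, session_id: str, blob: bytes) -> None:
        self._mailboxes[session_id] = blob

    def take(self, session_id: str) -> bytes:
        return self._mailboxes.pop(session_id)  # raises KeyError on any second attempt


def start_pairing(ak_private: bytes, relay: OneTimeRelay) -> str:
    """First device: seal AK.private under a one-time key; return the QR payload."""
    session_id = os.urandom(8).hex()
    pairing_key = os.urandom(32)  # shown only in the QR code, never sent to the relay
    relay.put(session_id, seal(pairing_key, ak_private, session_id.encode()))
    key_b64 = base64.urlsafe_b64encode(pairing_key).decode()
    return json.dumps({"sid": session_id, "k": key_b64})


def complete_pairing(qr_payload: str, relay: OneTimeRelay) -> bytes:
    """New device: parse the scanned QR, fetch the blob once, decrypt AK.private locally."""
    data = json.loads(qr_payload)
    pairing_key = base64.urlsafe_b64decode(data["k"])
    return open_sealed(pairing_key, relay.take(data["sid"]), data["sid"].encode())
```

A second scan of the same QR code fails because the relay has already deleted the blob. A photographed code is therefore useless once pairing completes.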

All historical chats that were previously only local to iOS can now be uploaded in encrypted form, using the same DEK wrapping scheme, so the entire history benefits from the same guarantees.

Deep Research that respects privacy

The second major addition is Silo Deep Research: multi-step research that runs through GLM 4.6, with all orchestration hosted inside a TEE and backed by a non-logging search API.

Deep research is not a single response. It is a process that scopes the task, issues many web queries, inspects sources, writes notes, and then produces an organized report. Silo’s implementation builds on LangChain’s Open Deep Research framework, which structures the agent as a three-phase pipeline (scope, research, write) and reports results on the DeepResearch Bench benchmark.

That open framework was used as a starting point and then heavily modified:

- The agent graphs were hardened for production stability through extensive experimentation.

- Tool-calling and browsing strategies were tuned around GLM 4.6.

- Extra checks were added to avoid low quality sources and reduce hallucinations.
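The scope, research, write structure, together with a source check, can be sketched in plain Python. This is a hypothetical skeleton and should not be read as Silo's or LangChain's code. In a real agent, each phase would call GLM 4.6 and `search` would query the non-logging provider. Here both are replaced with deterministic stubs. `is_acceptable_source` and its `BLOCKED_DOMAINS` set are an invented example of a quality filter.

```python
# Hypothetical three-phase research skeleton with stubbed LLM and search calls.
from dataclasses import dataclass, field
from typing import Callable
from urllib.parse import urlparse


@dataclass
class SearchResult:
    url: str
    snippet: str


@dataclass
class ResearchState:
    question: str
    brief: str = ""
    sub_queries: list[str] = field(default_factory=list)
    notes: list[str] = field(default_factory=list)
    sources: list[str] = field(default_factory=list)


BLOCKED_DOMAINS = {"content-farm.example"}  # hypothetical low-quality domains


def is_acceptable_source(result: SearchResult) -> bool:
    """Hypothetical quality filter: HTTPS only, no blocked domain, non-empty snippet."""
    url = urlparse(result.url)
    return (url.scheme == "https"
            and url.hostname not in BLOCKED_DOMAINS
            and bool(result.snippet.strip()))


def scope(state: ResearchState) -> ResearchState:
    # Phase 1: turn the question into a brief and sub-queries (an LLM call in practice).
    state.brief = f"Research brief: {state.question}"
    state.sub_queries = [state.question, f"{state.question} recent developments"]
    return state


def research(state: ResearchState, search: Callable[[str], list[SearchResult]],
             max_sources: int = 5) -> ResearchState:
    # Phase 2: run queries, skip duplicates and rejected sources, and take notes.
    for query in state.sub_queries:
        for result in search(query):
            if result.url in state.sources or not is_acceptable_source(result):
                continue
            state.sources.append(result.url)
            state.notes.append(f"{result.snippet} [{len(state.sources)}]")
            if len(state.sources) >= max_sources:
                return state
    return state


def write(state: ResearchState) -> str:
    # Phase 3: assemble a cited report from the notes (an LLM call in practice).
    body = "\n".join(f"- {note}" for note in state.notes)
    refs = "\n".join(f"[{i}] {url}" for i, url in enumerate(state.sources, start=1))
    return f"{state.brief}\n\n{body}\n\nSources:\n{refs}"


def run_deep_research(question: str, search: Callable[[str], list[SearchResult]]) -> str:
    return write(research(scope(ResearchState(question)), search))
```

Passing a single state object from phase to phase keeps notes and sources together, so the writer can cite each note by source index.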

The deep research server itself runs inside an AWS Nitro TEE, which provides hardware-based isolation of the process and its memory from the underlying host. All intermediate notes, browse results, and the final report live only inside the enclave during computation and are then stored in the same end-to-end encrypted storage format described above.

Web search uses a non-logging provider. No research queries are turned into profiles or advertising segments.

Why GLM 4.6 is a good deep research engine

Deep research depends heavily on reliable tool use and browsing. GLM 4.6 is designed for exactly that class of workloads. It is a frontier-scale model with a 200K context window and strong performance on agentic tasks and coding. (Z.ai)

Its predecessor GLM 4.5 is one of the top function-calling models on the Berkeley Function Calling Leaderboard (BFCL), which is a public benchmark that evaluates how well models can call tools in realistic scenarios. A model lineage that scores well on BFCL is a strong fit for research agents that must call web search, browsing tools, and parsing tools accurately.
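In practice, accurate tool calling means the model emits well-formed calls that the host can validate and execute. The sketch below assumes an OpenAI-style tool-call format, with a function name plus JSON-encoded arguments, which many model APIs follow. Whether Silo's proxy uses exactly this shape is an assumption. `web_search` and `fetch_page` are hypothetical stubs.

```python
# Hypothetical tool registry and dispatcher for model-emitted tool calls.
import json
from typing import Any, Callable


def web_search(query: str, max_results: int = 3) -> list[str]:
    # Stub: a real agent would call the non-logging search provider here.
    slug = query.replace(" ", "-")
    return [f"https://example.org/{slug}/{i}" for i in range(max_results)]


def fetch_page(url: str) -> str:
    # Stub: a real agent would fetch and parse the page here.
    return f"(contents of {url})"


# Tool schemas in the OpenAI-style "function" format (assumed, not confirmed for Silo).
TOOL_SCHEMAS = [
    {"type": "function", "function": {
        "name": "web_search",
        "description": "Search the web and return result URLs.",
        "parameters": {"type": "object",
                       "properties": {"query": {"type": "string"},
                                      "max_results": {"type": "integer"}},
                       "required": ["query"]}}},
    {"type": "function", "function": {
        "name": "fetch_page",
        "description": "Fetch the text of a web page.",
        "parameters": {"type": "object",
                       "properties": {"url": {"type": "string"}},
                       "required": ["url"]}}},
]
TOOLS: dict[str, Callable[..., Any]] = {"web_search": web_search, "fetch_page": fetch_page}
REQUIRED = {s["function"]["name"]: s["function"]["parameters"]["required"] for s in TOOL_SCHEMAS}


def tool_message(call_id: str, payload: dict) -> dict:
    return {"role": "tool", "tool_call_id": call_id, "content": json.dumps(payload)}


def dispatch(tool_call: dict) -> dict:
    """Validate one model-emitted tool call, run it, and wrap the result for the next turn."""
    call_id, fn = tool_call["id"], tool_call["function"]
    name = fn["name"]
    if name not in TOOLS:
        return tool_message(call_id, {"error": f"unknown tool {name!r}"})
    try:
        args = json.loads(fn["arguments"])
    except json.JSONDecodeError as exc:
        return tool_message(call_id, {"error": f"arguments are not valid JSON: {exc.msg}"})
    if not isinstance(args, dict):
        return tool_message(call_id, {"error": "arguments must be a JSON object"})
    missing = [p for p in REQUIRED[name] if p not in args]
    if missing:
        return tool_message(call_id, {"error": f"missing required arguments: {missing}"})
    try:
        return tool_message(call_id, {"result": TOOLS[name](**args)})
    except TypeError as exc:  # e.g. an unexpected argument name
        return tool_message(call_id, {"error": str(exc)})
```

Returning errors as tool messages, rather than raising, lets the model see what went wrong and retry with corrected arguments.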

To the team’s knowledge, Silo is the first consumer deep research implementation that pairs:

- A state-of-the-art tool-using model for multi-step research.

- A non-logging search backend.

- An inference server running entirely inside a hardware TEE.

- End-to-end encrypted storage for the resulting reports and all intermediate content.

New model lineup

The new release expands the set of available models inside Silo.

GLM 4.6 is now the primary open model, served in confidential computing mode on NVIDIA H200 GPUs for both regular chat and deep research. Alongside it, Silo now includes:

- Dolphin-mistral-24b (uncensored).

- GPT 5 Pro as a closed-source model behind Silo’s proxy layers.

- Existing open models such as DeepSeek R1 and Llama 3.3, running in confidential computing mode as before.

A note on uncensored models

Different users have different preferences about safety filters and how strict a model should be. We believe the choice of filtering should sit as close to the user as possible.

To explore that space, Silo is experimenting with a privately hosted uncensored model: Dolphin-mistral-24b. It runs inside the same TEE and confidential GPU stack as the other models, with the same network isolation and no-logging guarantees.

Web app, encrypted sync, deep research: how it fits together

Putting the pieces together:

- iOS and the new web app both act as clients of the same end-to-end encrypted storage.

- The TEE-protected proxy on the server side handles chat, tool calls, and now deep research, speaking to models like GLM 4.6 served in confidential computing mode.

- Each response is encrypted under a fresh DEK, and that DEK is wrapped to the client's AK.public.

- New devices join through a QR-based key transfer, not through any server-controlled recovery mechanism.

- Deep research agents run in a separate Nitro TEE, using a non-logging search provider, and write their outputs into the same encrypted store.

The result is a personal AI system that can now follow a user from phone to browser without weakening the original promise: a private assistant by default, where plaintext lives on devices and inside hardware enclaves, not in log files.

What comes next

Some of the earlier roadmap remains in motion and is now easier to deliver on top of this foundation:

- The sub-1B-parameter anonymizer model was initially deployed directly on iPhones, but extensive experiments showed that latency and overall experience varied significantly between older and newer devices. To provide a consistent, low-latency experience, the anonymizer is being moved into a GPU-backed confidential computing proxy, so prompts can be anonymized with hardware-level privacy guarantees and without on-device delays.

- Multimodal models in confidential compute mode, wired into both iOS and the web app.

- Private uploads of images, with the same encrypted sync pipeline.

If you see any bugs with this major release, please report them to dev@freysa.ai!

Try it now:

- Webapp: https://silo.freysa.ai/

- iOS app: https://link.freysa.ai/appstore